Generative AI

Tackling Infrastructure Challenges, Domain-Specific LLMs, Workforce Disruption and the Future of Jobs

Hello there,

Today, we'll explore several pivotal aspects of generative AI. Let’s dive right in…

AI Infrastructure: Bridging the Gap

The gap between AI capabilities and industrial applications continues to grow. As generative AI progresses, existing infrastructure struggles to keep up.

One striking example is applying ChatGPT to the internal review process within the medical system. Even something as mundane as reviewing documented referrals would be strictly off limits for compliance reasons, because we've designed our systems (e.g. our review software) for people as end consumers, not machines.

Benn Stancil's piece "The rapture and the reckoning" highlights the same issue in data warehouse schema design, along with the unconventional thinking involved in moving away from once-strict principles.

In a similar vein, one possible idea is to rethink how we design software and start designing patterns for AI, specifically for LLMs. By optimizing engineering for AI rather than humans (for example, creating code readers for AI rather than for human eyes), we could potentially revolutionize data consumption, analytics engineering, and data reliability, and transform the data industry.

JPMorgan's $12B investment in data emphasizes the need to rebuild data infrastructure for banking. On its surface, the investment looks too large and the goal too broad. Upon hearing the news, Wall Street analysts, perhaps rightly, downgraded the stock. But if we consider for a minute the possibility of an AI-native banking infrastructure, as well as the $10 billion Microsoft recently invested in OpenAI, this JPMorgan investment starts to appear in a new light and may pave the way for entirely new applications, products, and software.

Domain-Specific LLMs: Enhancing Accuracy and Reliability

LLMs inherently lack the consistency typical of traditional computing. A slight, immaterial change in the wording of a prompt can produce output with an entirely unrelated meaning.

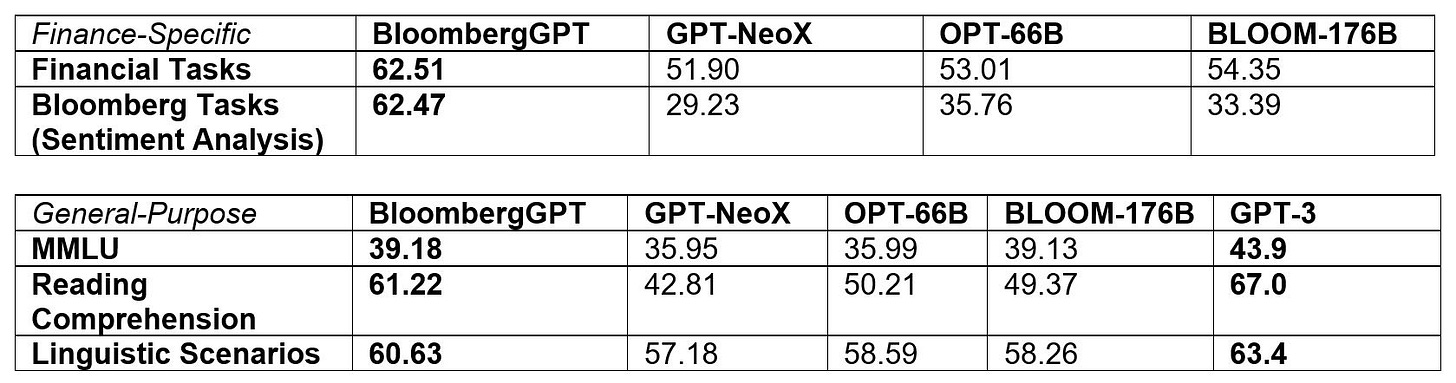

Developing domain-specific LLMs is a potential solution, as it narrows the prediction range and improves output accuracy. For example, Bloomberg recently released a finance-tuned LLM, which may signal the beginning of a new trend.

To fully unleash generative AI's potential, we must tackle infrastructure challenges and adopt domain-specific LLMs for more accurate and reliable outcomes.

Workforce Disruption and the Future of Jobs with AI Integration

Workforce Disruption

Technological advancements have typically resulted in an increase in specialized roles. As tasks become easier to perform, more people are drawn to them.

A recent meme sparked discussions about job replacement.

Contrary to popular belief, I think jobs won't disappear but will evolve. For instance, while low-skilled social media content writers might be replaced, we could see highly skilled writers emerge who leverage AI to enhance their work.

Where AI's potential does lie is in replacing decision-making roles where systematic judgment is preferred (such as those of political leaders). I think we will continue to need the creative elements of everyday professions, but we will surely see less need for low-skill labor on platforms such as Fiverr, where talent will have to become much more skilled at relying on AI in order to keep winning work contracts.

Is StackOverflow now a giant wiki for LLMs?

Maybe. But interestingly, highly paid engineers often don't share portfolios, as their code is locked within corporate environments like FANG. Engineers at start-ups are more likely to share their code. As another example, data analysts are evaluated on their thought process during interviews, with FANG talent often cruising through on the strength of their work experience. Basically, publicly available code portfolios come largely from groups that actually need them to get a good job.

What all of this means is that the code accessible to today's LLMs may not be all that sophisticated, or may not be fully representative of the latest and greatest. And while current LLMs might know everything about Python, some other domains (such as areas in Analytics, Data Science or Research) might be entirely proprietary and off limits to current web crawlers.

A key question is whether AI can write better SQL than data analysts. Domain knowledge is crucial here, and AI needs access to private data sources to excel in specific domains, such as writing SQL. Overcoming this obstacle means addressing non-AI issues first, before generic AI applications can access and use that domain knowledge. The future of LLMs relies on integrating retrieval-based NLP with generative models, refining how we communicate with LLMs, and finding ways to equip AI with domain knowledge.

One area where this is happening is the attempt to decouple an LLM's semantic understanding from facts. This can be achieved by combining retrieval-based NLP with generative models. Databricks' own Matei Zaharia articulates in his interview with Patrick Chase that the future success of LLMs depends on addressing challenges like teaching them new facts and improving how we communicate with these models. Matei's research focuses on combining traditional retrieval-based NLP with generative models and pulling work out of LLMs into code. External indexes can store knowledge for LLMs, and initiatives like #gpt_index facilitate this pattern. The Demonstrate-Search-Predict framework allows engineers to be more explicit when instructing LLMs.
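To make the external-index idea a bit more concrete, here is a minimal sketch of the pattern: facts live outside the model, the most relevant ones are looked up for each question, and only then is a prompt assembled. Everything in it is an assumption made for illustration. The `ExternalIndex` class, the `build_prompt` helper, the word-overlap scoring, and the sample facts are hypothetical, and this is not how gpt_index or Demonstrate-Search-Predict are implemented. A real system would likely use embeddings for search and would send the prompt to an actual LLM.

```python
import re
from collections import Counter
from math import sqrt


def tokenize(text):
    """Lowercase the text and split it into word-like tokens."""
    return re.findall(r"[a-z0-9_]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in set(a) & set(b))
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ExternalIndex:
    """Hypothetical stand-in for an external knowledge index."""

    def __init__(self):
        self._docs = []  # list of (text, bag-of-words) pairs

    def add(self, text):
        self._docs.append((text, Counter(tokenize(text))))

    def search(self, query, k=2):
        """Return up to k stored facts, most similar to the query first."""
        q = Counter(tokenize(query))
        scored = [(cosine(q, vec), text) for text, vec in self._docs]
        scored = [pair for pair in scored if pair[0] > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [text for _, text in scored[:k]]


def build_prompt(question, facts):
    """Assemble a prompt that keeps retrieved facts separate from the question."""
    context = "\n".join(f"- {fact}" for fact in facts) or "- (no matching facts found)"
    return (
        "Answer using only the facts below.\n"
        f"Facts:\n{context}\n"
        f"Question: {question}\n"
    )


# Made-up private domain knowledge that a web crawler would never see.
index = ExternalIndex()
index.add("Table orders has columns order_id, customer_id, amount, created_at.")
index.add("Table customers has columns customer_id, region, signup_date.")
index.add("The fiscal year starts on February 1.")

question = "Which columns does the orders table have?"
facts = index.search(question, k=1)
prompt = build_prompt(question, facts)
print(prompt)
```

The point of the design is that updating what the model "knows" becomes a matter of editing the index rather than retraining, which is also how private schemas could be made available for a task like writing SQL.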

Stay tuned for more insights into the world of data and AI.