Developing AI Applications

Taking a closer look at the AI feature development lifecycle

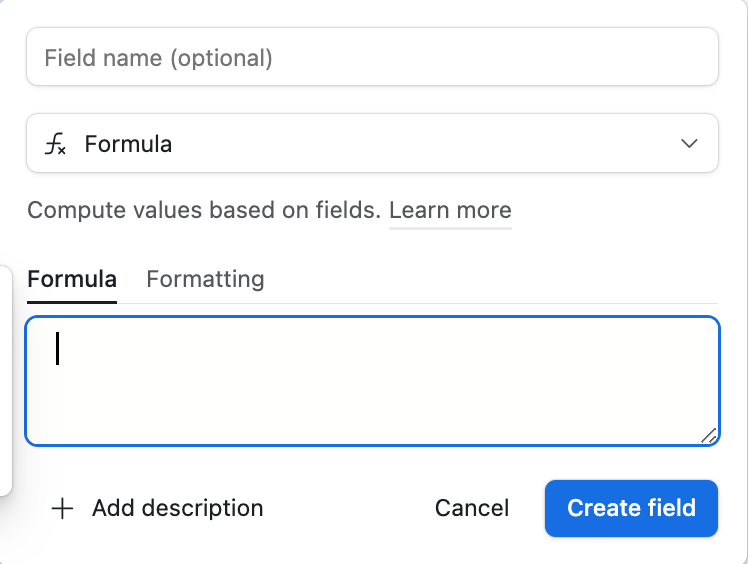

Those of us who grew up learning Excel macros had a moment of instant gratification back in March of 2024, when Airtable announced the release of several AI features. Finally, it seemed, AI would take care of the pain Excel had caused so many.

But the moment was fleeting. Today, no one I know who actively uses Airtable has knowingly subscribed to its AI features. Yours truly tried the AI formula suggestions for a month and walked away unimpressed.

The experience, while unusual for a company with such strong product DNA as Airtable, is actually quite representative of the broader problem incumbent players face when they try to introduce AI as features.

To name a few, Asana and my own alma mater, Looker, are currently facing similar challenges. Product ideas that are obvious on the surface fail in real life because the AI capabilities feel raw and unpolished.

AI vs Traditional Product Life Cycle

I was speaking to a potential customer the other day about AI product features, and we arrived at a new notion: AI engineering needs a separate timeline from product feature development.

Consider the traditional engineering-based product development lifecycle:

Identify and validate customer needs

Create and refine an MVP

Beta test and prepare for release

Plan early adoption

Market

Gather feedback and scale

Note that something is missing here. The whole list is about minimizing go-to-market and adoption risk.

What it leaves out is that an AI feature’s value proposition is usually obvious enough that it is not the main source of adoption risk. Consider a similar list for a typical introduction of an AI feature set:

Identify the problem and scope (AI)

Assess technical feasibility and gather data (AI)

Train and validate (AI)

Create raw model / technology prototype (AI)

Test and make changes (AI)

Create and refine an MVP (Product)

Beta test and prepare for release (Product)

Market (Product)

Gather feedback and scale (Product)

Note how only the last 4 items resemble the traditional list, while the first 5 are entirely new R&D / AI items that did not exist in traditional product development. And so, I would argue, the risk in AI product feature adoption comes from technical capabilities, not from go-to-market (as in traditional product development).

AI Is Hitting a Wall

Those who have been paying attention will know what I’ve been saying publicly for months now (and privately for several months before that): AI is going to hit a wall. This idea finally seems to have quietly gained general consensus (as noted by the CEOs of Scale.AI and Databricks), and it did not take the full year I originally suggested.

While potentially bad for dumb capital allocators (cough--Softbank--cough) who essentially give AI companies $$ to buy NVIDIA’s GPUs, the development is really good for the rest of the ecosystem. Finally, we can treat OpenAI, Anthropic’s Claude, and the rest of them as the commodity services that they are, and stop debating who has the better model (Spoiler: they do not compete on quality).

And what that means is that as an ecosystem we have to become less dependent on artificial boosts in the quality of output from Large Language Models, and start building quality AI end-user applications.

Basically, we cannot continue introducing AI into products the way we normally would other features. Instead, every AI application development team needs to be viewed as an R&D lab: one whose end output is a technology / model / methodology / framework that feeds into the new product development lifecycle.

Why Airtable?

Those who have followed this blog for a while will note that when it comes to data, I typically like to talk about Snowflake, Databricks, and my own alma mater, Looker. I also occasionally cover other business intelligence tools.

What very few know is that I am secretly a spreadsheet nerd. And I don’t mean just an average IB Analyst-level Excel junkie. From Macros to entire BI applications on top of Spreadsheets, I’ve done it all. I’ve integrated spreadsheets with databases. I’ve built entire loan underwriting platforms on top of Spreadsheets. I’ve probably found more ways to hack spreadsheets than the original developers ever intended. So when Airtable came along and introduced its AI formulas, I was “all in” for it.

But looking at AI in the context of spreadsheets is important for other reasons as well. Today, there are thousands of conversations happening around the traditional data stack and the introduction of AI capabilities into it. Databases and BI tools market new features. Integration platforms pitch investors their big visions of uniting the world of tools. Everyone has grand AI visions.

And many of these pitches, no doubt, are indeed directionally accurate. The only problem is that when it comes to technology, directional correctness is not enough.

AI products are like vectors. Vectors require both direction and magnitude. In some markets, the right magnitude is obvious; in others, an incorrect magnitude is the difference between a billion-dollar outcome and a complete failure. Analytics is one such market. Hundreds of teams have attempted to build unicorn companies, but only Tableau and Looker have broken through to a billion-dollar exit. These two companies had the correct vectors, both in direction and magnitude.

Perhaps in other markets the difference in magnitude is not as severe. Maybe it is totally OK for Asana to have a crappy AI helper interface. Or maybe a developer in India will copy all of Asana’s functionality, cut the price in half, and introduce a really well fine-tuned AI helper interface. Would that be enough for me to cancel my Asana subscription? Probably…

-SG Mir

Head of AI, Data, and Product